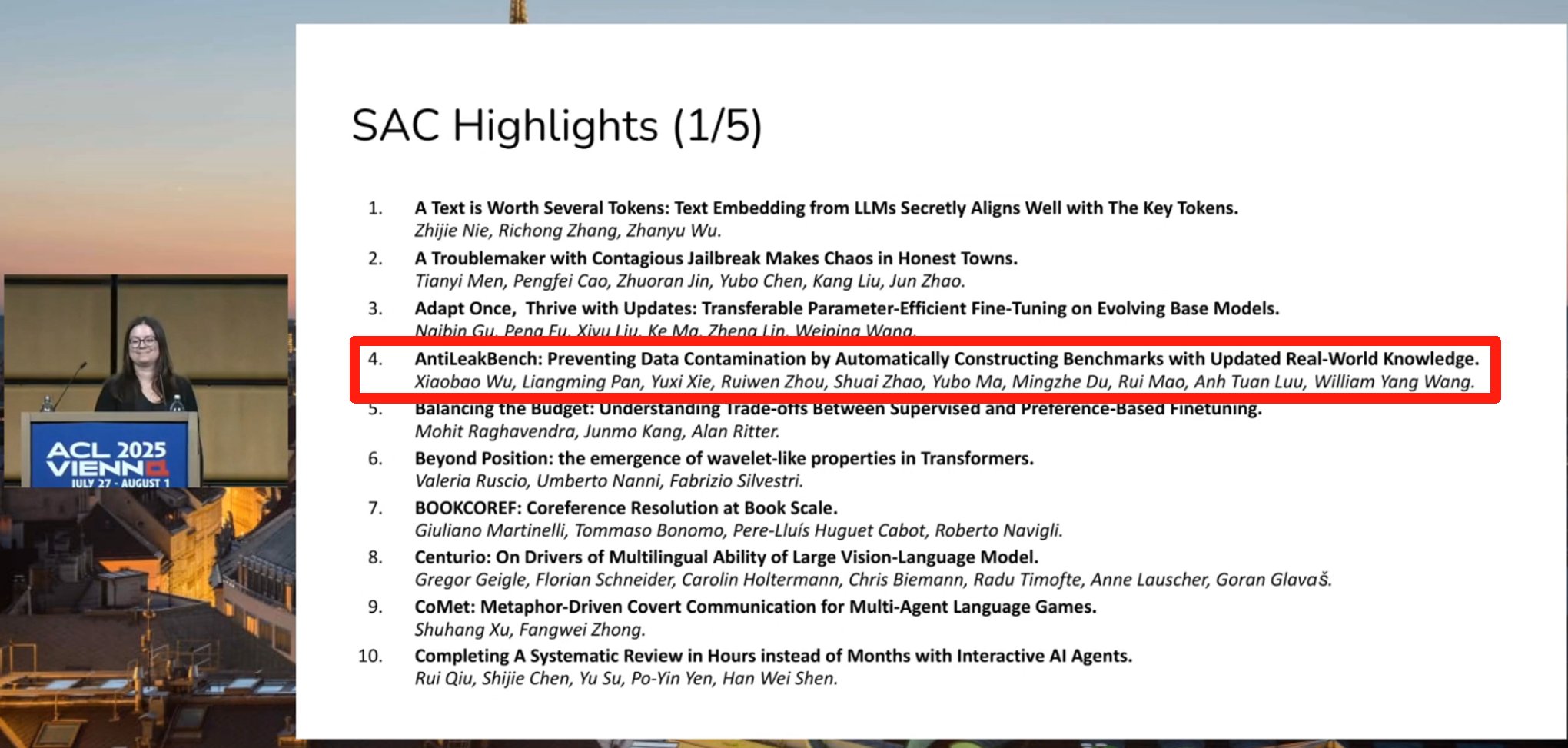

We are thrilled to announce that our paper “AntiLeakBench: Preventing Data Contamination by Automatically Constructing Benchmarks with Updated Real-World Knowledge” has received the SAC Highlights Award at ACL 2025! 🎉

About the Paper

Large language models (LLMs) are at serious risk of data contamination: when benchmark test data leaks into a model's training set, evaluation results stop being fair or reliable. AntiLeakBench tackles this challenge by:

- Constructing samples with explicitly new knowledge absent from LLM training sets, ensuring contamination-free evaluation.

- Automating benchmark updates without human effort.

- Enabling fair, low-cost, and scalable evaluation for emerging LLMs.

This framework gives the community a practical and sustainable way to keep LLM evaluation trustworthy as new models are released.
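To make the first point above concrete, here is a minimal sketch of the core idea: keep only knowledge that changed after a model's training cutoff, then turn it into question-answer samples. This is an illustration under our own assumptions, not the paper's actual pipeline. The `Fact` class, the `select_post_cutoff` and `to_qa_sample` helpers, the question template, and the toy data are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date


@dataclass(frozen=True)
class Fact:
    """A hypothetical record of real-world knowledge with its update date."""
    subject: str
    relation: str
    answer: str
    updated_on: date  # when this answer became true in the real world


def select_post_cutoff(facts, cutoff):
    """Keep only facts updated strictly after the model's knowledge cutoff."""
    return [f for f in facts if f.updated_on > cutoff]


def to_qa_sample(fact):
    """Turn a fact into a simple question-answer pair (toy template)."""
    return {
        "question": f"What is the {fact.relation} of {fact.subject}?",
        "answer": fact.answer,
    }


# Toy data, invented purely for illustration.
facts = [
    Fact("Team A", "head coach", "Alice", date(2023, 1, 10)),
    Fact("Team B", "head coach", "Bob", date(2024, 9, 5)),
]

# Assumed knowledge cutoff of the model under evaluation.
cutoff = date(2024, 1, 1)

samples = [to_qa_sample(f) for f in select_post_cutoff(facts, cutoff)]
```

With this toy data, only the Team B fact survives the filter, because it was updated after the assumed cutoff. A model with that cutoff could not have seen the new answer during training, which is what makes the resulting sample contamination-free by construction.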

📅 Awarded on: July 31, 2025

📍 Conference: ACL 2025 @ Vienna, Austria

✍️ Authors: Xiaobao Wu, Liangming Pan, Yuxi Xie, Ruiwen Zhou, Shuai Zhao, Yubo Ma, Mingzhe Du, Rui Mao, Anh Tuan Luu, William Yang Wang